DDL stands for Data Definition Language; DML stands for Data Manipulation Language.

Data import

Using load

Syntax: load data [local] inpath '' [overwrite] into table table_name [partition(partition_name=partition_value)]

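load data: loads data into a table.

local: load from the local file system (the file is copied); without it, the path refers to HDFS (and the file is moved into the table directory).

inpath: the path of the data to load.

overwrite: replace the data already in the table; without it, the new data is appended.

into table: introduces the target table; without overwrite, the data is appended.

table_name: the name of the target table.

partition: the target partition.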
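To make the local/HDFS and overwrite/append distinctions concrete, here is a minimal Python simulation that treats the table as a plain directory. It is a sketch under stated assumptions, not Hive's implementation: `load_data`, the `student` table and `student.txt` are hypothetical, and only the file movement is modeled (LOCAL copies the file, an HDFS path moves it, OVERWRITE first removes the existing files, and a partition becomes a `key=value` sub-directory). Metastore updates and file-name collisions are ignored.

```python
import shutil
import tempfile
from pathlib import Path
from typing import Dict, Optional


def load_data(src: Path, table_dir: Path, local: bool = False,
              overwrite: bool = False,
              partition: Optional[Dict[str, str]] = None) -> Path:
    """Simplified model of where LOAD DATA puts a file (not Hive's code)."""
    target = table_dir
    for key, value in (partition or {}).items():
        # Each partition is a sub-directory named key=value.
        target = target / f"{key}={value}"
    target.mkdir(parents=True, exist_ok=True)
    if overwrite:
        # OVERWRITE: remove the files already in the table or partition.
        for old in target.iterdir():
            if old.is_file():
                old.unlink()
    dest = target / src.name
    if local:
        shutil.copy(src, dest)  # LOCAL: the source file is copied and stays put
    else:
        shutil.move(str(src), str(dest))  # HDFS path: the source file is moved
    return dest


# Usage: a temporary directory stands in for both the source and the warehouse.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    table = root / "warehouse" / "student"  # hypothetical table directory
    src = root / "student.txt"              # hypothetical data file
    src.write_text("1001\tss1\n1002\tss2\n")
    load_data(src, table, local=True)
    print(sorted(p.name for p in table.iterdir()), src.exists())
```

Calling it again with `local=False` would leave no copy of the source file behind, which mirrors why a file loaded from HDFS disappears from its original location.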

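Using insert

Basic insert

Syntax: insert into table_name values (value1, value2, ...)

Insert from a query

Syntax: insert into table_name select fields from other_table [where params]

Overwrite insert

Syntax: insert overwrite table table_name select fields from other_table [where params]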

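Multi-insert (one source table feeding several inserts)

Syntax: from source_table insert overwrite|into table target_table [partition(...)] select fields [where params]

The clause insert overwrite|into table target_table [partition(...)] select fields [where params] can be repeated as many times as needed.

Note: from source_table comes first, so the select clauses that follow do not include their own from clause.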
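The point of multi-insert is that the source table is read once and every insert clause takes its rows from that single pass. The Python sketch below models this on in-memory lists; `multi_insert`, the branch dictionaries and the tables `emp`, `emp_10` and `emp_20` are hypothetical, and the overwrite/append handling is simplified (old rows are simply dropped or kept).

```python
from typing import Callable, Dict, List, Optional

Row = Dict[str, object]


def multi_insert(source: List[Row], branches: List[dict],
                 tables: Dict[str, List[Row]]) -> None:
    """Simplified model of: from src insert ... select ... insert ... select ...

    Each branch is {"table": name, "mode": "overwrite" | "into",
    "select": [columns], "where": optional predicate}.
    """
    for branch in branches:
        if branch["mode"] == "overwrite":
            tables[branch["table"]] = []            # old rows are replaced
        else:
            tables.setdefault(branch["table"], [])  # old rows are kept
    for row in source:  # the source rows are read once, shared by all branches
        for branch in branches:
            where: Optional[Callable[[Row], bool]] = branch.get("where")
            if where is None or where(row):
                tables[branch["table"]].append({c: row[c] for c in branch["select"]})


# Roughly corresponds to (hypothetical tables emp, emp_10, emp_20):
#   from emp
#   insert overwrite table emp_10 select id, name where deptno = 10
#   insert into table emp_20 select id, name where deptno = 20;
emp = [{"id": 1, "name": "a", "deptno": 10},
       {"id": 2, "name": "b", "deptno": 20},
       {"id": 3, "name": "c", "deptno": 10}]
tables = {"emp_10": [{"id": 0, "name": "old"}], "emp_20": [{"id": 9, "name": "kept"}]}
multi_insert(emp, [
    {"table": "emp_10", "mode": "overwrite", "select": ["id", "name"],
     "where": lambda r: r["deptno"] == 10},
    {"table": "emp_20", "mode": "into", "select": ["id", "name"],
     "where": lambda r: r["deptno"] == 20},
], tables)
print(tables)
```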

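Create and insert

Method 1: create the table from the result of a query on another table

Syntax: create table if not exists new_table as select fields from other_table

Method 2: create the table with location pointing at the directory that already holds the data

Syntax: create table if not exists table_name(column column_type) location '/data/path'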

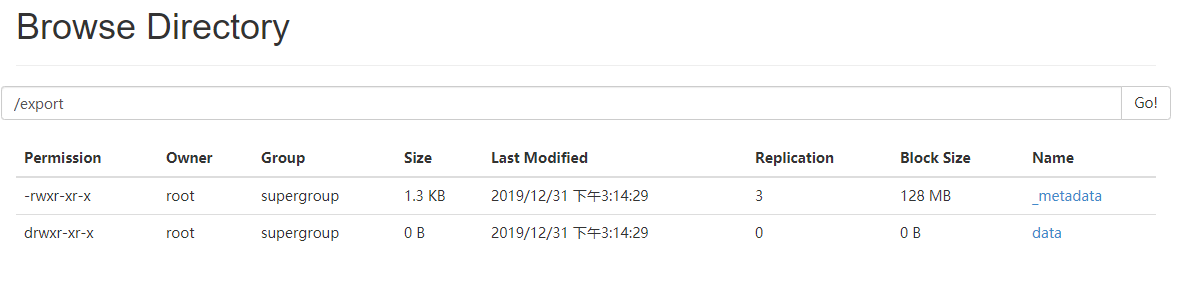

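Using import

Note: the data must first be exported with export before it can be loaded with import.

Syntax: import table table_name [partition(partition_name=partition_value)] from '/file/path'

Importing into an existing table (empty, with no structure)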

```
hive (default)> import table test from '/export';
```

Importing into an existing table (empty, with its own structure)

```
hive (default)> import table people from '/export';
```

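This fails with a schema mismatch error. The reason: import loads data produced by export, and that data carries its own metadata (the table definition), which does not match the structure of the existing people table.

Importing into a table that does not exist (Hive creates it from the exported metadata)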

```
hive (default)> import table people1 from '/export';
```
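The three cases above can be summarized with a small Python model of the metadata check. It is a simplified sketch, not Hive's exact rules: it assumes an existing table must match the exported schema column by column (name and type, case-insensitive), and it ignores partitions and other checks Hive performs. `import_table`, `SchemaMismatchError` and the sample schemas are hypothetical.

```python
from typing import Dict, List, Tuple

Schema = List[Tuple[str, str]]  # [(column_name, column_type), ...]


class SchemaMismatchError(Exception):
    """Raised when the exported schema does not match an existing table."""


def _normalize(schema: Schema) -> Schema:
    # Hive identifiers and type names are case-insensitive.
    return [(col.lower(), typ.lower()) for col, typ in schema]


def import_table(catalog: Dict[str, Schema], name: str,
                 exported_schema: Schema) -> str:
    """Simplified model of IMPORT TABLE (an assumption, not Hive's exact rules)."""
    existing = catalog.get(name)
    if existing is None:
        # Table does not exist: it is created from the exported metadata.
        catalog[name] = list(exported_schema)
        return "created"
    if _normalize(existing) != _normalize(exported_schema):
        raise SchemaMismatchError(f"schema of {name} does not match the export")
    return "loaded into existing table"


# Hypothetical schemas: the export of dept vs. an unrelated existing table people.
dept_export = [("deptno", "int"), ("dname", "string"), ("loc", "int")]
catalog = {"people": [("name", "string"), ("age", "int")]}
print(import_table(catalog, "people1", dept_export))
try:
    import_table(catalog, "people", dept_export)
except SchemaMismatchError as err:
    print("error:", err)
```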

Data export

Exporting with insert

Export query results to the local file system

Syntax: insert overwrite local directory '/local/file/path' select fields from table_name

```
hive (default)> insert overwrite local directory '/opt/module/hive/tmp_data/dept' select * from dept;
```

```
[root@hadoop02 tmp_data]# cd dept/
[root@hadoop02 dept]# cat 000000_0
```

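As you can see, the exported data looks unformatted: the fields are separated by Hive's default field delimiter \001 (Ctrl-A), which is not visible in the cat output, so the columns appear run together.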
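Because the separator is only invisible, not missing, a file such as 000000_0 can still be parsed by splitting on \001. The Python sketch below assumes Hive's default text conventions (\001 between fields, \N for NULL) and no nested collection columns; `parse_hive_text` and the sample rows are hypothetical.

```python
from typing import List, Optional

DEFAULT_FIELD_DELIM = "\x01"  # Hive's default field delimiter (Ctrl-A, \001)
NULL_MARKER = "\\N"           # Hive's default text representation of NULL


def parse_hive_text(text: str,
                    delim: str = DEFAULT_FIELD_DELIM) -> List[List[Optional[str]]]:
    """Split the lines of an exported text file such as 000000_0 into fields."""
    rows: List[List[Optional[str]]] = []
    for line in text.splitlines():
        # Map the \N marker back to None; every other field stays a string.
        rows.append([None if f == NULL_MARKER else f for f in line.split(delim)])
    return rows


# Hypothetical content of 000000_0 written without ROW FORMAT:
raw = "10\x01ACCOUNTING\x011700\n20\x01RESEARCH\x01\\N\n"
print(parse_hive_text(raw))
# The same rows exported with FIELDS TERMINATED BY '\t':
print(parse_hive_text(raw.replace("\x01", "\t"), delim="\t"))
```

Passing `delim="\t"` covers the formatted export that follows, where ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t' replaces the default separator.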

Export formatted query results to the local file system

Syntax: insert overwrite local directory '/local/file/path' ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t' select * from table_name

```
hive (default)> insert overwrite local directory '/opt/module/hive/tmp_data/dept' ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t' select * from dept;
```

```
[root@hadoop02 tmp_data]# cat dept/000000_0
```

Export query results to HDFS

Syntax: insert overwrite directory '/hdfs/file/path' ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t' select * from table_name

```
hive (default)> insert overwrite directory '/dept' ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t' select * from dept;
```

Other ways to export

Exporting with the hadoop command

Syntax: hadoop fs -get /hdfs/file/path /local/file/path

Exporting from the Hive shell

Syntax: bin/hive -e 'select * from table_name;' > /local/file/path

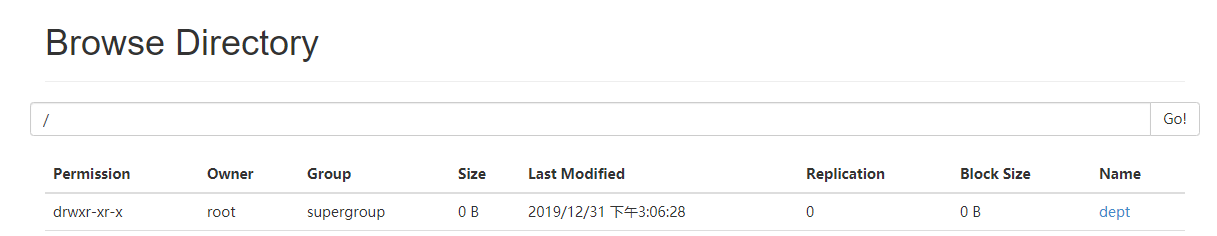

Exporting to HDFS with export

Syntax: export table table_name to '/hdfs/file/path'

```
hive (default)> export table dept to '/export';
```

Truncating a table

Syntax: truncate table table_name

This only works on managed tables; it cannot delete the data in an external table.
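The managed/external rule can be sketched in a few lines of Python. This is a simplified model, not Hive code: a table is a hypothetical dictionary whose `type` uses Hive's table-type names (`MANAGED_TABLE`, `EXTERNAL_TABLE`), truncating a managed table empties its file list while keeping the table definition, and an external table is refused. `truncate_table`, `dept_ext` and the file names are hypothetical.

```python
from typing import Dict, List


def truncate_table(table: Dict[str, object]) -> None:
    """Simplified model: TRUNCATE empties a managed table and refuses external ones."""
    if table["type"] != "MANAGED_TABLE":
        # An external table does not own its data, so truncation is rejected.
        raise ValueError(f"cannot truncate non-managed table {table['name']}")
    files: List[str] = table["files"]  # data files under the table directory
    files.clear()                      # the table definition itself remains


managed = {"name": "dept", "type": "MANAGED_TABLE", "files": ["000000_0"]}
external = {"name": "dept_ext", "type": "EXTERNAL_TABLE", "files": ["dept.txt"]}
truncate_table(managed)
print(managed["files"])
```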