A table created in Hive is either a managed table or an external table. Managed tables are also called internal tables.

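Managed tables

Tables are created as managed (internal) tables by default. Hive controls the lifecycle of their data and, by default, stores it in a subdirectory of the directory defined by the configuration property hive.metastore.warehouse.dir.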
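A minimal sketch for checking where a managed table's data lives, run from the Hive CLI. The table name demo_managed and its columns are hypothetical and used only for illustration.

```sql
-- Print the configured warehouse root (the stock default is /user/hive/warehouse).
set hive.metastore.warehouse.dir;

-- demo_managed is a hypothetical table, not one from this walkthrough.
create table if not exists demo_managed (id int, name string);

-- In the output, the "Location:" row should be a subdirectory of the
-- warehouse root, and "Table Type:" should read MANAGED_TABLE.
describe formatted demo_managed;
```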

When you drop a managed table, Hive also deletes the table's data.
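The drop behavior can be observed with a throwaway table. This is a sketch under assumptions: demo_drop is a hypothetical table, the warehouse root is assumed to be the default /user/hive/warehouse, and the table is assumed to live in the default database. If HDFS trash is enabled, the files may be moved to the trash directory rather than removed immediately.

```sql
-- demo_drop is a hypothetical table used only for this check.
create table if not exists demo_drop (id int, name string);
insert into table demo_drop values (1, 'a');

-- The table directory and its data file should be listed here.
dfs -ls /user/hive/warehouse/demo_drop;

-- Dropping a managed table removes the metadata and the data directory.
drop table demo_drop;

-- This listing is now expected to fail because the directory is gone.
dfs -ls /user/hive/warehouse/demo_drop;
```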

Managed tables are not well suited to sharing data with other tools.

Test

Create an ordinary table

    create table if not exists students(

Create a table from a query result (the rows returned by the query are inserted into the newly created table)

    create table if not exists people2 as select name, friends, children, address from people;

    hive (default)> create table if not exists people2 as select name, friends, children, address from people;

    hive (default)> select * from people2;

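External tables

Hive does not fully own the data of an external table. When the table is dropped, the underlying data is not deleted; only the metadata that describes the table is removed.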
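As an illustration of this ownership model, the sketch below creates an external table over an explicit HDFS directory and then drops it. The table name demo_ext, its columns, and the path /tmp/demo_ext are all hypothetical.

```sql
-- demo_ext and /tmp/demo_ext are hypothetical names for illustration.
create external table if not exists demo_ext (id int, name string)
row format delimited fields terminated by '\t'
location '/tmp/demo_ext';

-- Only the metastore entry is removed by this statement.
drop table demo_ext;

-- The directory, and any files other tools placed in it, should still be listed.
dfs -ls /tmp/demo_ext;
```

Pointing the table at an explicit LOCATION is what makes it practical to share the same files with other tools.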

Test

Create two tables, one for departments and one for employees

    create external table if not exists default.dept(

    create external table if not exists default.emp(

Load data

    hive (default)> load data local inpath '/opt/module/hive/tmp_data/dept.txt' into table dept;

    hive (default)> load data local inpath '/opt/module/hive/tmp_data/emp.txt' into table emp;

Drop dept

    hive (default)> drop table dept;

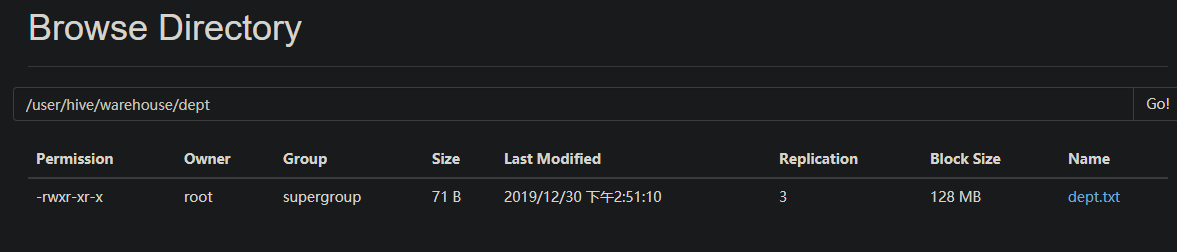

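As shown above, the data is still there after the table has been dropped.

Re-create the dept table

Run the same create statement as above to re-create the dept table, but do not load any data into it.

Then query dept

    hive (default)> create external table if not exists default.dept(

    hive (default)> select * from dept;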

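As you can see, if the data files still exist, a newly created table only needs to point to that HDFS directory for the data to be queryable again.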
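One way to confirm why the re-created table sees the old rows is to compare the table's location with the files on HDFS. The sketch below assumes the default warehouse root /user/hive/warehouse and that dept was created in the default database without an explicit LOCATION clause; adjust the path if your setup differs.

```sql
-- The "Location:" row shows the HDFS directory the table definition points to.
describe formatted dept;

-- Assumed path: default warehouse root plus the table name. The dept.txt file
-- loaded earlier should still be listed, which is why the query returns rows.
dfs -ls /user/hive/warehouse/dept;
```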

Converting between managed and external tables

Check the table type

    hive (default)> desc formatted test;

Change it to an external table

    hive (default)> alter table test set tblproperties('EXTERNAL'='TRUE');

Check the table type again

    hive (default)> desc formatted test;

Change it back to a managed table

    hive (default)> alter table test set tblproperties('EXTERNAL'='FALSE');

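Notes

EXTERNAL must be uppercase

Whether a table is external can only be controlled by setting EXTERNAL to TRUE or FALSE. 'EXTERNAL'='TRUE' and 'EXTERNAL'='FALSE' are fixed forms and are case-sensitive, so write them exactly as shown.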
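The full round trip can be sketched on a scratch table. The name demo_convert is hypothetical, and depending on the Hive version and its managed-table settings, the conversion may be restricted.

```sql
-- demo_convert is a hypothetical scratch table.
create table if not exists demo_convert (id int);

-- "Table Type:" is expected to read MANAGED_TABLE.
describe formatted demo_convert;

-- Keep the key and value uppercase, exactly in this form.
alter table demo_convert set tblproperties('EXTERNAL'='TRUE');

-- "Table Type:" is now expected to read EXTERNAL_TABLE.
describe formatted demo_convert;

-- Switch back to a managed table.
alter table demo_convert set tblproperties('EXTERNAL'='FALSE');
```

Checking the Table Type row after each alter statement is a quick way to confirm that the property was actually recognized.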